41 one hot encoding vs label encoding

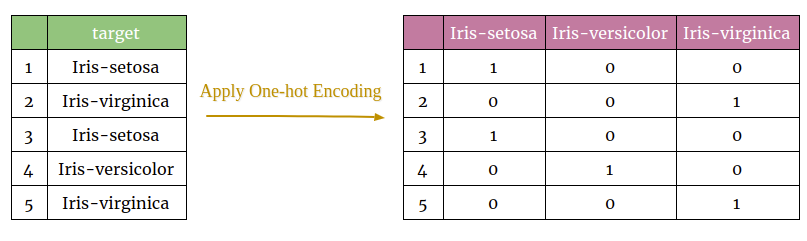

Label Encoder vs. One Hot Encoder in Machine Learning What one hot encoding does is take a column of categorical data that has been label encoded and split it into multiple columns. The numbers are replaced by 1s and 0s,...

Encoding categorical columns - Label encoding vs one hot encoding for ... But when I tried both label and one hot encoding on the dataset, one hot encoding gave better accuracy and precision. Could you kindly share your thoughts? The accuracy scores of various models on train and test are: The accuracy score of a simple decision tree on label encoded data: TRAIN: 86.46%, TEST: 79.42%. The accuracy score of tuned decision ...

Choosing the right Encoding method-Label vs OneHot Encoder Nov 08, 2018 · Let us understand the working of Label and One hot encoder and further, we will see how to use these encoders in python and see their impact on predictions. Label Encoder: Label Encoding in Python can be achieved using Sklearn Library. Sklearn provides a very efficient tool for encoding the levels of categorical features into numeric values.
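As a minimal sketch of the label encoding described above, the following uses scikit-learn's `LabelEncoder`. The country values are made-up sample data, not taken from the quoted article. Note that `LabelEncoder` assigns codes in sorted order of the class names, so the integers carry no meaning beyond identity.

```python
from sklearn.preprocessing import LabelEncoder

# Hypothetical sample column (illustrative data only).
countries = ["Spain", "France", "Germany", "France"]

encoder = LabelEncoder()
codes = encoder.fit_transform(countries)

# classes_ holds the distinct labels in sorted order; each label's
# position in this array is its integer code.
print(list(encoder.classes_))  # ['France', 'Germany', 'Spain']
print(codes.tolist())          # [2, 0, 1, 0]

# The mapping is reversible.
decoded = encoder.inverse_transform(codes)
print(list(decoded))
```

In scikit-learn, `LabelEncoder` is documented as a tool for target labels; for input features the library also offers `OrdinalEncoder`, which works on 2-D data.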

One hot encoding vs label encoding

Difference between Label Encoding and One-Hot Encoding | Pre-processing ... In one hot encoding, each label is converted to an attribute, and that attribute is given the value 0 (False) or 1 (True). For example, consider a gender column having values Male or M and Female or F. After one-hot encoding, it is converted into two separate attributes (columns), Male and Female.

Categorical Data Encoding with Sklearn LabelEncoder and OneHotEncoder Label Encoding vs One Hot Encoding. Label encoding may look intuitive to us humans, but machine learning algorithms can misinterpret it by assuming the codes have an ordinal ranking. In the example below, Apple has an encoding of 1 and Broccoli has an encoding of 3. That does not mean Broccoli is higher than Apple, but it does mislead the ML algorithm.

Machine learning feature engineering: Label encoding Vs One-Hot ... In this tutorial, you will learn how to apply Label encoding & One-hot encoding using Scikit-learn and pandas. Encoding is a method to convert categorical va...
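The gender example above can be sketched with pandas. This is an illustration under assumptions: the four rows are invented, and `dtype=int` is passed so the output is 0/1 integers regardless of the pandas version's default.

```python
import pandas as pd

# Hypothetical sample data for the gender column.
df = pd.DataFrame({"gender": ["Male", "Female", "Female", "Male"]})

# One column per category; a 1 marks the category present in that row.
one_hot = pd.get_dummies(df["gender"], dtype=int)
print(one_hot)
```

Each row has exactly one 1, so no category is numerically "larger" than another, which is the property that label encoding lacks for nominal data.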

Binary Encoding vs One-hot Encoding - Cross Validated 1 Answer. If you have a system with n different (ordered) states, the binary encoding of a given state is simply its rank number − 1 in binary format (e.g. for the k th state, the binary k − 1). The one hot encoding of this k th state will be a vector/series of length n with a single high bit (1) at the k th place, and all the other bits ...

Why One-Hot Encode Data in Machine Learning? Let's say I have a column of categorical data with 3 unique values like France, Germany, Spain. So after label encoding and one hot encoding, I get three additional columns that have a combination of 1s and 0s. I read somewhere on the Internet that label encoding alone gives the algorithm the impression that the values in the column are related.

One Hot Encoding VS Label Encoding | by Prasant Kumar - Medium Here we use One Hot Encoders for encoding because they create a separate column for each category, which defines whether that category is present for a particular entry or not by...

Label encoding vs Dummy variable/one hot encoding - correctness? 1 Answer. It seems that "label encoding" just means using numbers for labels in a numerical vector. This is close to what is called a factor in R. Whether you should use such label encoding does not depend on the number of unique levels; it depends on the nature of the variable (and to some extent on the software and model/method to be used). Coding ...
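The binary versus one-hot description in the Cross Validated snippet above can be sketched in plain Python. The function names are hypothetical, and zero-padding the binary string to a fixed width is an assumption made here so that all states have the same length.

```python
def binary_encode(k: int, n: int) -> str:
    """Binary encoding of the k-th of n states: k - 1 written in binary."""
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # Width needed to represent the largest value, n - 1 (at least 1 bit).
    width = max(1, (n - 1).bit_length())
    return format(k - 1, f"0{width}b")


def one_hot_encode(k: int, n: int) -> list[int]:
    """One-hot encoding: length-n vector with a single 1 at the k-th place."""
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    return [1 if position == k else 0 for position in range(1, n + 1)]


# Third state out of four.
print(binary_encode(3, 4))   # 10
print(one_hot_encode(3, 4))  # [0, 0, 1, 0]
```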

label encoding vs one hot encoding | Data Science and Machine Learning ... In label encoding, we convert the categorical values into numeric values by assigning each category a number. Say our categories are "pink" and "white": in label encoding we replace pink with 1 and white with 0. This leads to a single numerically encoded column. In one-hot encoding, by contrast, we end up with new columns.

Encoding Categorical Variables: One-hot vs Dummy Encoding Dec 16, 2021 · This is because one-hot encoding has added 20 extra dummy variables when encoding the categorical variables. So, one-hot encoding expands the feature space (dimensionality) in your dataset. Implementing dummy encoding with Pandas. To implement dummy encoding on the data, you can follow the same steps performed in one-hot encoding.

Target Encoding Vs. One-hot Encoding with Simple Examples Jan 16, 2020 · Label Encode (give a number value to each category, i.e. cat = 0), shown in the ‘Animal Encoded’ column in Table 3. ... One-hot encoding works well with nominal data and eliminates any ...

Label Encoder vs One Hot Encoder in Machine Learning [2022] One hot encoding takes a column of categorical data that has already been label encoded and splits it into multiple columns. The numbers are replaced by 1s and 0s, depending on which column has what value. The one-hot encoder does not accept 1-D arrays. The input should always be a 2-D array.
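A minimal sketch of the 2-D input requirement and of dummy encoding, using scikit-learn's `OneHotEncoder` on the pink/white example. The sample rows are invented, and using `drop="first"` to obtain dummy encoding is one possible approach, not necessarily the one the quoted articles use. The sparse result is converted with `.toarray()` only to make it easy to print.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Hypothetical sample column.
colors = np.array(["pink", "white", "pink"])

# OneHotEncoder expects shape (n_samples, n_features), so a single
# column is reshaped from 1-D to a 2-D array with one feature.
X = colors.reshape(-1, 1)

# Full one-hot encoding: one output column per category.
full = OneHotEncoder().fit_transform(X).toarray()
print(full.tolist())   # [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]

# Dummy encoding: drop the first category, leaving k - 1 columns.
dummy = OneHotEncoder(drop="first").fit_transform(X).toarray()
print(dummy.tolist())  # [[0.0], [1.0], [0.0]]
```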

Feature Engineering: Label Encoding & One-Hot Encoding - Fizzy Categorical data often require a transformation technique if we want to include them, namely Label Encoding or One-Hot Encoding. Label Encoding. What Label Encoding does is transform text values into unique numeric representations. For example, 2 categorical columns "gender" and "city" were converted to numeric values, a ...

When to use One Hot Encoding vs LabelEncoder vs DictVectorizor? Still, there are algorithms like decision trees and random forests that can work with categorical variables just fine, and LabelEncoder can be used to store values using less disk space. One-Hot-Encoding has the advantage that the result is binary rather than ordinal and that everything sits in an orthogonal vector space.

Label Encoding vs One Hot Encoding | by Hasan Ersan YAĞCI | Medium Label Encoding and One Hot Encoding. 1. Label Encoding: Label encoding is mostly suitable for ordinal data, because we give a number to each unique value in the data. If we use label encoding in...

What are the pros and cons of label encoding categorical features ... Answer: If the cardinality (the # of categories) of the categorical features is low (relative to the amount of data), one-hot encoding will work best. You can use it as input into any model. But if the cardinality is large and your dataset is small, one-hot encoding may not be feasible, and you m...

One-hot Encoding vs Label Encoding - Vinicius A. L. Souza The main reason to use one-hot encoding over label encoding is for situations where the categories have no order or relationship. In an ML model, a larger number can be seen as having a higher priority, which might not be the case. One-hot encoding guarantees that each category is seen with the same priority.

One hot encoding vs label encoding in Machine Learning Apr 30, 2022 · Difference between One-Hot encoding and Label encoding; Label encoding python; Assignment; Endnotes; Many of you might be confused between these two — Label Encoder and One Hot Encoder. The basic purpose of these two techniques is the same i.e. conversion of categorical variables to numerical variables. But the application is different. So ...

Dropping one of the columns when using one-hot encoding Aug 23, 2016 · "Does keeping all k values theoretically make them weaker features?" No (though I'm not 100% sure what you mean by "weaker"). "Using something like PCA": note, just in case, that PCA on a set of dummies representing one and the same categorical variable has little practical point, because the correlations inside the set of dummies reflect merely the relationships among the category frequencies ...
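To illustrate why one column can be dropped at all, here is a small plain-Python sketch. It is not taken from the quoted answer; the data and variable names are assumptions. With k one-hot columns, every row sums to 1, so any one column is fully determined by the other k − 1.

```python
# Hypothetical categories and sample values.
categories = ["France", "Germany", "Spain"]
values = ["Spain", "France", "Germany", "France"]

# Full one-hot encoding: k columns, exactly one 1 per row.
full = [[int(value == category) for category in categories] for value in values]

# Dummy encoding: drop the first column ("France"), keeping k - 1 columns.
reduced = [row[1:] for row in full]

# The dropped column can be rebuilt from the remaining ones.
recovered = [1 - sum(row) for row in reduced]

print(full)       # [[0, 0, 1], [1, 0, 0], [0, 1, 0], [1, 0, 0]]
print(reduced)    # [[0, 1], [0, 0], [1, 0], [0, 0]]
print(recovered)  # [0, 1, 0, 1]
```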

When to Use One-Hot Encoding in Deep Learning? One hot encoding is an essential part of the feature engineering process when training learning algorithms. For example, if our variable were color and the labels were "red," "green," and "blue," we could encode each of these labels as a three-element binary vector: Red: [1, 0, 0], Green: [0, 1, 0], Blue: [0, 0, 1].
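The color example above can be reproduced with a few lines of plain Python. The helper name `one_hot` is hypothetical, and the position of each label follows the order in which the labels are listed.

```python
def one_hot(label: str, labels: list[str]) -> list[int]:
    """Return a binary vector with a 1 at the position of `label`."""
    vector = [0] * len(labels)
    vector[labels.index(label)] = 1
    return vector


labels = ["red", "green", "blue"]
encoded = {label: one_hot(label, labels) for label in labels}

print(encoded["red"])    # [1, 0, 0]
print(encoded["green"])  # [0, 1, 0]
print(encoded["blue"])   # [0, 0, 1]
```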

Difference between Label Encoding and One Hot Encoding Conclusion: Use Label Encoding when your data contains ordinal features, where it can give higher accuracy, and also when there are too many categorical features in your data, because in such scenarios One Hot Encoding may perform poorly due to high memory consumption while creating the dummy variables.
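For ordinal features, the integer codes are only useful if they follow the real order of the levels. The sketch below, with an invented "size" feature, uses an explicit mapping for that reason; codes assigned alphabetically (which is how scikit-learn's `LabelEncoder` orders its classes) would not match the intended ranking here.

```python
# Hypothetical ordinal feature with a known order, smallest first.
size_order = ["small", "medium", "large"]
size_to_code = {level: code for code, level in enumerate(size_order)}

sizes = ["medium", "small", "large", "small"]
encoded = [size_to_code[size] for size in sizes]
print(encoded)  # [1, 0, 2, 0]

# For comparison: codes assigned in alphabetical order put "large"
# below "medium" and "small", which breaks the intended ranking.
alphabetical = {level: code for code, level in enumerate(sorted(size_order))}
print(alphabetical)  # {'large': 0, 'medium': 1, 'small': 2}
```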

Label Encoder vs. One Hot Encoder in Machine Learning To avoid this, we 'OneHotEncode' that column. What one hot encoding does is take a column of categorical data that has been label encoded and split it into multiple columns. The numbers are replaced by 1s and 0s, depending on which column has what value. In our example, we'll get three new columns, one for ...

ML | One Hot Encoding to treat Categorical data parameters May 27, 2022 · One Hot Encoding using the Scikit-learn library: One hot encoding is one of the encoders provided by the Scikit-learn library. It is used to convert numerical categorical variables into binary vectors. Before implementing this algorithm, make sure the categorical values are label encoded, as one hot encoding takes only numerical ...

Label Encoding vs. One Hot Encoding | Data Science and Machine Learning ... In previous sections, we did the pre-processing for continuous numeric features. But our data set has other features too, such as Gender, Married, Dependents, Self_Employed and Education. All these categorical features have string values. For example, Gender has two levels, either Male or Female.

One-Hot Encoding - an overview | ScienceDirect Topics One important decision in state encoding is the choice between binary encoding and one-hot encoding. With binary encoding, as was used in the traffic light controller example, each state is represented as a binary number. Because K binary numbers can be represented by log₂ K bits, a system with K states needs only log₂ K bits of state.
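The bit counts above can be compared with a short sketch. One assumption is added here: when K is not a power of two, log₂ K is rounded up to the next whole bit, which the integer expression `(k - 1).bit_length()` does without floating-point error. The function names are hypothetical.

```python
def binary_state_bits(k: int) -> int:
    """Bits needed to number k states in binary (log2 of k, rounded up)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return (k - 1).bit_length()


def one_hot_state_bits(k: int) -> int:
    """One-hot encoding uses one bit per state."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return k


for k in (2, 3, 4, 8, 100):
    print(k, binary_state_bits(k), one_hot_state_bits(k))
# 2 1 2
# 3 2 3
# 4 2 4
# 8 3 8
# 100 7 100
```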
